Start a crawl

The first step in using the redirection.io crawler is to start a crawl. This page explains how to start a crawl and which options you can set.

Start a crawl from a URL

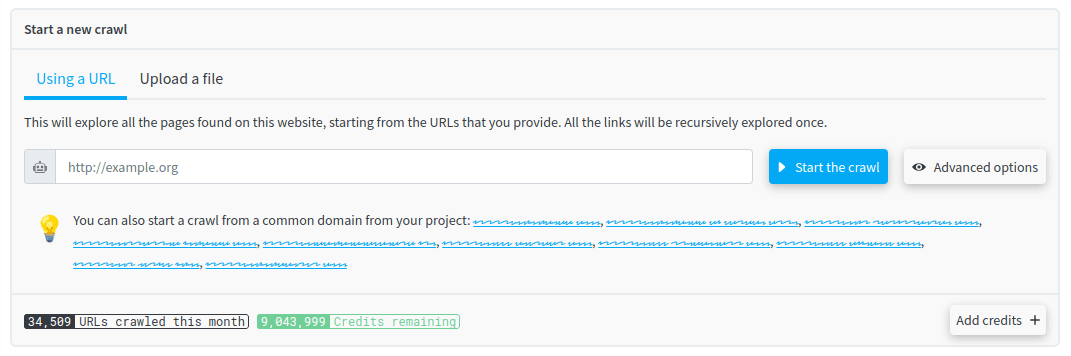

From the crawler main page, you can start a new crawl:

Enter a URL in the input field and click the Start the crawl button. The redirection.io crawler then downloads this page and follows all the links it contains, and so on, recursively.

If you have already started a crawl, or if redirection.io is already installed on your website and has collected some data, you can choose one of the suggested URLs to start the crawl.

Depending on the number of pages on the website and its performance, the crawl can take from a few seconds to a few hours. You can close your browser in the meantime: the crawl continues to run in the background. You can come back later to see the results, or be notified when the crawl is finished.
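The recursive exploration described above can be pictured as a breadth-first traversal of the link graph. The following is only a minimal sketch, not the actual redirection.io implementation: the `crawl` function, the `get_links` callback and the in-memory `SITE` dictionary are hypothetical names used for illustration, and no network request is made.

```python
from collections import deque


def crawl(start_url, get_links):
    """Visit start_url, then every page reachable from it by following links."""
    seen = {start_url}           # URLs already discovered
    queue = deque([start_url])   # URLs waiting to be downloaded
    order = []                   # URLs in the order they were visited
    while queue:
        url = queue.popleft()
        order.append(url)
        for link in get_links(url):
            if link not in seen:  # never queue the same URL twice
                seen.add(link)
                queue.append(link)
    return order


# Hypothetical website: each page maps to the links it contains.
SITE = {
    "https://example.com/": ["https://example.com/a", "https://example.com/b"],
    "https://example.com/a": ["https://example.com/b", "https://example.com/c"],
    "https://example.com/b": ["https://example.com/"],
    "https://example.com/c": [],
}

pages = crawl("https://example.com/", lambda url: SITE.get(url, []))
```

The `seen` set is what keeps the traversal finite when pages link back to each other, as the home page and `/b` do in this example.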

Advanced settings

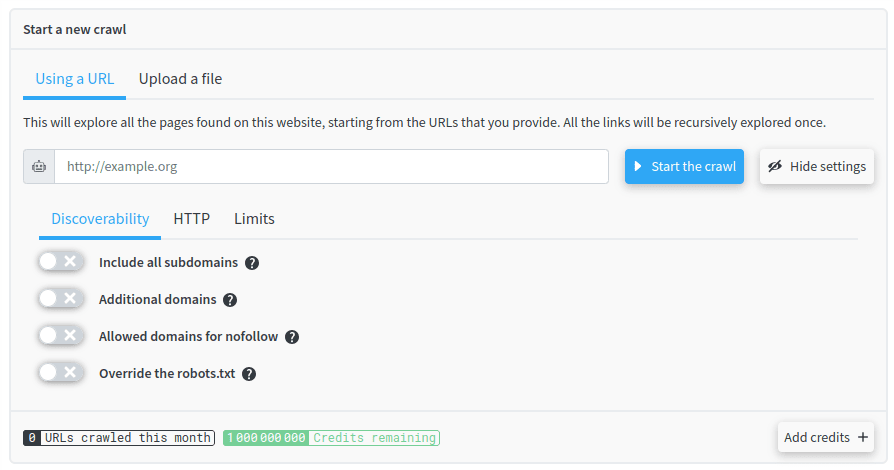

You can also configure the way redirection.io explores your website by clicking on the Advanced settings button.

Under the Discoverability tab, you can configure how the crawler will explore your website:

- Include all subdomains: If enabled, the crawler also explores all the links from discovered subdomains. Use with caution, as this can generate a lot of traffic. For example, if the crawler starts on example.com and finds absolute links that target sub.example.com, it will also crawl these links (and all the links that they themselves contain).

- Additional domains: List other domains to crawl here. If a URL is discovered on any of these domains, it will be crawled and explored.

- Allowed domains for nofollow: List other domains to allow here. When redirection.io discovers a link to an external domain, it raises an error if the rel=nofollow attribute is missing, unless the target domain is in this list.

- Override the robots.txt: By default, the redirection.io crawler respects the robots.txt directives of the crawled domains. However, you can replace the default robots.txt file with a custom one, which is useful if you need to crawl more URLs than the default robots.txt file allows.
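To make the Discoverability options more concrete, here is a rough sketch of how a crawler could decide whether a discovered URL is in scope, and how a custom robots.txt changes what may be fetched. It is an illustration under assumptions, not redirection.io's code: `in_scope` and `allowed_by_robots` are hypothetical helpers, and the `redirection-io` user-agent token is only guessed from the default user-agent string. The robots.txt parsing relies on Python's standard `urllib.robotparser` module.

```python
from urllib.parse import urlsplit
from urllib.robotparser import RobotFileParser


def in_scope(url, start_host, include_subdomains=False, additional_domains=()):
    """Return True if url belongs to a domain the crawl is allowed to explore."""
    host = (urlsplit(url).hostname or "").lower()
    if host == start_host or host in additional_domains:
        return True
    # Subdomains are only followed when the option is enabled.
    return include_subdomains and host.endswith("." + start_host)


def allowed_by_robots(url, robots_txt, user_agent="redirection-io"):
    """Check url against the given robots.txt content (default or override)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical robots.txt served by the site, and a more permissive override.
site_robots = "User-agent: *\nDisallow: /private/\n"
override_robots = "User-agent: *\nDisallow:\n"

blocked = allowed_by_robots("https://example.com/private/page", site_robots)
unblocked = allowed_by_robots("https://example.com/private/page", override_robots)
```

With the site's own file, `/private/page` is refused. With the override, the same URL becomes crawlable, which is the situation the Override the robots.txt option is meant for.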

Under the HTTP tab, you can configure how the crawler interacts with your website:

- Automatic rate limiting: Our crawler engine automatically adapts and optimizes the crawl rate to match the website's capacity, without causing slowdowns or other issues on your website. However, you can bypass this feature to force a specific rate limit and define the number of URLs that the crawler downloads concurrently.

- Disable SSL checking: If this option is enabled, the crawler allows insecure server connections when using SSL. If misused, this option can lead to security issues, as it lets the crawler fetch pages that have an invalid or self-signed SSL certificate. It can however be useful if your website is not yet available in production.

- HTTP authentication: Sets an Authorization: basic <value> header. This setting is useful to crawl websites, such as staging environments, that are protected by HTTP Basic authentication. Passwords are stored encrypted on redirection.io’s infrastructure.

- Custom user-agent: You can set a custom user-agent for the crawler. This can be useful if you want to simulate a specific browser, or if you want to identify the crawler in your logs. The default value is Mozilla/5.0 (compatible; redirection-io/1.0; +https://redirection.io/).

- Hostname overrides: With this option, you can override the DNS resolution (the IP address associated with a hostname) of the crawled domains. This is useful if you want to crawl a website that is not available on the public internet, for example.
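As an illustration of what the HTTP authentication and Custom user-agent settings translate to on the wire, the sketch below builds the corresponding request headers. The `build_headers` helper is hypothetical and this is not how redirection.io necessarily implements it. The only facts relied on are that HTTP Basic authentication sends the Base64 encoding of `username:password`, and that the scheme name is case-insensitive (it is conventionally written `Basic`).

```python
import base64

# Default user-agent quoted in the settings description above.
DEFAULT_USER_AGENT = (
    "Mozilla/5.0 (compatible; redirection-io/1.0; +https://redirection.io/)"
)


def build_headers(username=None, password=None, user_agent=DEFAULT_USER_AGENT):
    """Return the headers a crawler could send with these settings."""
    headers = {"User-Agent": user_agent}
    if username is not None:
        credentials = f"{username}:{password or ''}".encode("utf-8")
        token = base64.b64encode(credentials).decode("ascii")
        headers["Authorization"] = f"Basic {token}"
    return headers


# Hypothetical staging credentials, for illustration only.
staging_headers = build_headers("user", "pass")
```

Base64 is an encoding, not encryption, so such credentials should only ever be sent over HTTPS.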

Under the Limits tab, you can define when a crawl must end:

- Unlimited crawled URLs: If enabled, the crawler continues to crawl until it has found all the URLs on the website, regardless of the number of URLs. If disabled, the crawler stops when it has found the maximum number of URLs.

- Unlimited crawl depth: If enabled, the crawler continues to crawl until it has found all the URLs on the website regardless of the depth of the website. If disabled, the crawler does not follow links that are deeper than the maximum depth.

- Unlimited crawl duration: If enabled, the crawler continues to crawl until it has found all the URLs on the website, regardless of the time it takes. If disabled, the crawler stops when the maximum duration is reached.

Once you have configured the crawler's behavior, click the Start the crawl button to launch it.
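The three limits can be thought of as independent stop conditions that the crawler checks as it goes. The sketch below is one possible way to model them, under the assumption that "unlimited" simply means no maximum is set. `CrawlLimits` and its field names are hypothetical and do not reflect redirection.io's internal API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CrawlLimits:
    # None means "unlimited" for the corresponding setting.
    max_urls: Optional[int] = None
    max_depth: Optional[int] = None
    max_seconds: Optional[float] = None

    def can_follow(self, depth: int) -> bool:
        """Links deeper than the maximum depth are not followed."""
        return self.max_depth is None or depth <= self.max_depth

    def should_stop(self, crawled: int, elapsed: float) -> bool:
        """Stop once the URL budget or the time budget is exhausted."""
        if self.max_urls is not None and crawled >= self.max_urls:
            return True
        return self.max_seconds is not None and elapsed >= self.max_seconds


# Hypothetical configuration: 1000 URLs at most, depth 3, one hour.
limits = CrawlLimits(max_urls=1000, max_depth=3, max_seconds=3600)
unlimited = CrawlLimits()
```

Note that the depth limit only prunes links, whereas the URL and duration limits end the crawl as a whole.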

What happens while the crawl is running

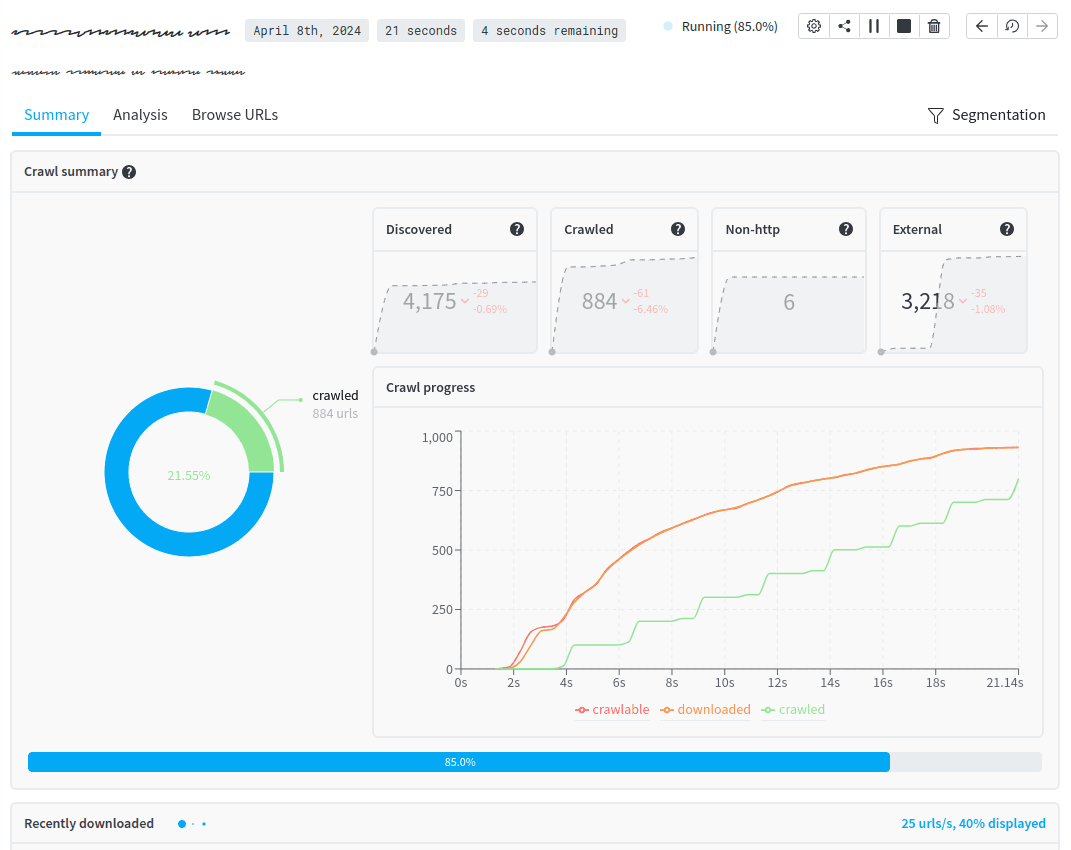

Once you have started a crawl, you can view its progress:

In the Crawl summary panel, there are 3 main sections:

- on the top right:

  - discovered URLs: the number of unique URLs that have been identified by the crawler. This number includes all the URLs (the ones that have been crawled, the ones that have not been crawled yet, the non-HTTP URLs, external links, etc.)

  - crawled URLs: the number of URLs that have been crawled (that is, that have been both downloaded and analyzed)

  - non-HTTP URLs: the number of URLs that do not use the HTTP(S) protocol (for example, mailto:, ftp:// or tel: links)

  - external URLs: the number of URLs that are not on the same domain as the starting URL

- on the bottom right, a chart showing the number of URLs over time:

  - crawlable URLs: URLs that can be crawled

  - download URLs: URLs that have already been downloaded by the crawler

  - crawled URLs: URLs that have been crawled (downloaded and analyzed)

- on the left, the ratio of crawled URLs to external URLs
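The distinction between non-HTTP, external and other URLs in the counters above can be illustrated with a small classifier. This is a sketch under assumptions: `classify` is a hypothetical helper, "internal" is a label introduced here for URLs on the starting domain, and every link is assumed to have already been resolved to an absolute URL.

```python
from collections import Counter
from urllib.parse import urlsplit


def classify(url, start_host):
    """Label an absolute URL as 'non-HTTP', 'external' or 'internal'."""
    parts = urlsplit(url)
    if parts.scheme.lower() not in ("http", "https"):
        return "non-HTTP"      # mailto:, ftp://, tel:, ...
    if (parts.hostname or "").lower() != start_host:
        return "external"      # not on the starting URL's domain
    return "internal"


# Hypothetical list of discovered links.
discovered = [
    "https://example.com/about",
    "https://example.com/contact",
    "mailto:hello@example.com",
    "tel:+33123456789",
    "ftp://files.example.com/archive.zip",
    "https://partner.example.org/",
]

counts = Counter(classify(url, "example.com") for url in discovered)
```

Here `counts` holds 2 internal, 3 non-HTTP and 1 external URL, for 6 discovered URLs in total.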

In the recently downloaded panel, you can see in real time the list of URLs that are being downloaded.

Once a crawl is finished, you can analyze the results.

In fact, you do not need to wait for the crawl to finish: as soon as the crawl has started, you can access partial results and analyze them under the Analysis and Browse URLs tabs of the crawler interface.

Start a crawl from a list of URLs

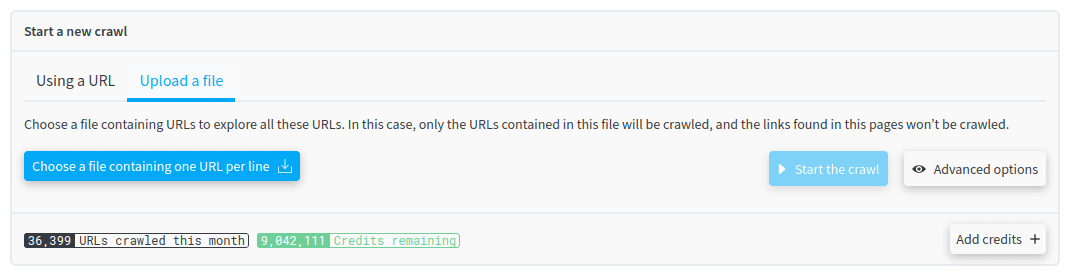

So far, we have seen how to start a crawl from a given URL. But what if you want to crawl a list of URLs, for example to check if they are still valid?

The redirection.io crawler allows you to do that! If you want to monitor a specific list of URLs, you can upload a text file containing all the URLs that you need to crawl.

The file must contain one absolute URL per line.
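Before uploading such a file, it can help to check that every line really is an absolute URL. The snippet below is a minimal sketch of such a check. `invalid_lines` is a hypothetical helper, and it assumes that only http and https URLs are expected and that blank lines can be ignored, neither of which is confirmed by the crawler's documentation.

```python
from urllib.parse import urlsplit


def invalid_lines(text):
    """Return (line_number, line) pairs that are not absolute HTTP(S) URLs."""
    problems = []
    for number, line in enumerate(text.splitlines(), start=1):
        line = line.strip()
        if not line:
            continue  # assumption: blank lines are harmless
        parts = urlsplit(line)
        if parts.scheme not in ("http", "https") or not parts.netloc:
            problems.append((number, line))
    return problems


# Hypothetical file content: the second line is a relative path.
sample = (
    "https://example.com/\n"
    "/relative/path\n"
    "https://example.com/about\n"
)

problems = invalid_lines(sample)
```

In this example, `problems` contains a single entry pointing at line 2, because `/relative/path` has neither a scheme nor a host.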

As always, you can configure the crawl settings by clicking on the Advanced settings button.

Scheduling crawls

Scheduling a crawl lets you run it on a regular basis. The results of each scheduled crawl are available under the "Crawls" tab, as if it had been triggered manually.

Scheduling crawls on a weekly or monthly basis is a good way to monitor the evolution of your website: you can see if the number of 404 errors is decreasing, if the number of pages is increasing, etc.