The instructions below have been customized for your project "".

Customize these instructions for the project

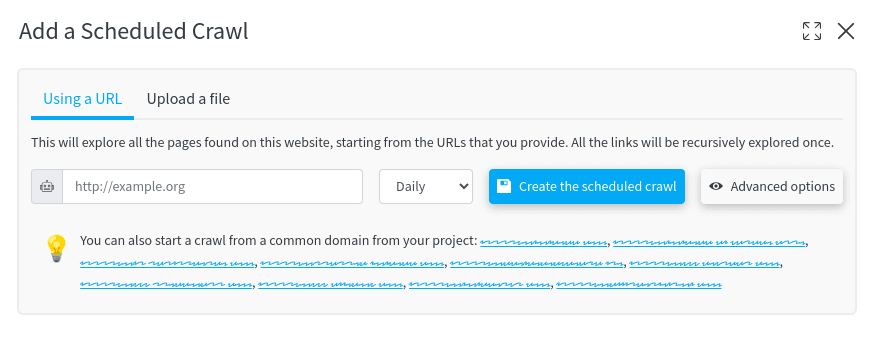

Schedule a crawl

Scheduling a crawl lets you run it on a regular basis. The results of each scheduled crawl are available under the "Crawls" tab, just as if the crawl had been triggered manually.

Running crawls regularly helps you track how your website evolves over time and stay informed of regressions and new issues.

All crawl settings can be configured in the same way as for a manual crawl. In addition, you can choose the frequency of the crawl, which can be set to:

- daily

- weekly

- or monthly

Once a crawl is finished, you can analyze the results.

You can be notified when a crawl finishes by setting up a notification channel. For weekly or monthly crawls, this is a convenient way to receive the results directly in your mailbox and keep an eye on how your website evolves.